With service workers, we gave developers a way to handle unreliable or absent network connections themselves. You get control over caching and over how requests are handled, which means you get to create your own patterns. Here are a few possible patterns, each shown in isolation. In practice, you'll likely use several of them in tandem, depending on URL and context.

For a working demo of some of these patterns, see Trained-to-thrill.

When to store resources

Service workers let you handle requests independently from caching, so I'll demonstrate them separately. First up: when should you add things to the cache?

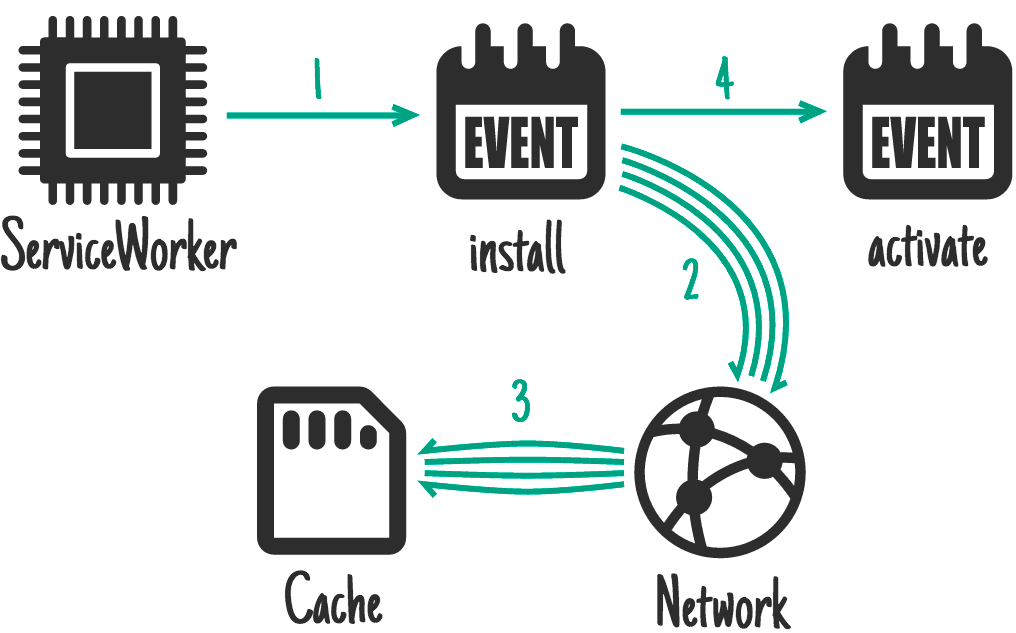

On install, as a dependency

The Service Worker API gives you an install event. You can use this to get stuff ready, stuff that must be ready before you handle other events. During install, previous versions of your service worker keep running and serving pages. Whatever you do at this time shouldn't disrupt the existing service worker.

Ideal for: CSS, images, fonts, JS, templates, or anything else you'd consider static to that version of your site.

Fetch the things that would make your site entirely non-functional if they failed to be fetched: the things an equivalent platform-specific app would make part of the initial download.

```javascript
self.addEventListener('install', function (event) {
  event.waitUntil(
    caches.open('mysite-static-v3').then(function (cache) {
      return cache.addAll([
        '/css/whatever-v3.css',
        '/css/imgs/sprites-v6.png',
        '/css/fonts/whatever-v8.woff',
        '/js/all-min-v4.js',
        // etc.
      ]);
    }),
  );
});
```

event.waitUntil takes a promise to define the length and success of the install. If the promise rejects, the installation is considered a failure and this service worker is abandoned (if an older version is running, it'll be left intact). caches.open() and cache.addAll() return promises. If any of the resources fail to be fetched, the cache.addAll() call rejects.

On trained-to-thrill I use this to cache static assets.
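To make that all-or-nothing behavior concrete, here is a simplified model of what cache.addAll() does. It's written as a plain function so it can run outside a browser. This is a sketch, not the real implementation: the name `addAllModel` is hypothetical, and its `cache` and `fetchFn` parameters stand in for a Cache object and fetch().

```javascript
// Simplified model of Cache.addAll() semantics (hypothetical helper, not the real API).
// `cache` is anything with a put(url, response) method; `fetchFn` behaves like fetch().
async function addAllModel(cache, urls, fetchFn) {
  // Fetch everything first; nothing is written until every request has succeeded.
  const responses = await Promise.all(
    urls.map(async (url) => {
      const response = await fetchFn(url);
      // addAll() also treats non-2xx responses as failures
      if (!response.ok) {
        throw new TypeError(`Bad response for ${url}: ${response.status}`);
      }
      return response;
    }),
  );
  // Only now store the responses, so a single failure leaves the cache untouched.
  await Promise.all(responses.map((response, i) => cache.put(urls[i], response)));
}
```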

On install, not as a dependency

This is similar to installing as a dependency, but won't delay install completing and won't cause installation to fail if caching fails.

Ideal for: Bigger resources that aren't needed straight away, such as assets for later levels of a game.

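```javascript
self.addEventListener('install', function (event) {
  event.waitUntil(
    caches.open('mygame-core-v1').then(function (cache) {
      // Not returned, so a failure here won't fail the install
      cache.addAll([
        // levels 11-20
      ]);
      // Returned, so the install waits for (and depends on) these
      return cache.addAll([
        // core assets and levels 1-10
      ]);
    }),
  );
});
```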

This example does not pass the cache.addAll promise for levels 11–20 back to event.waitUntil, so even if it fails, the game will still be available offline. Of course, you'll have to cater for the possible absence of those levels and reattempt caching them if they're missing.

The service worker may be killed while levels 11–20 are downloading, because it has finished handling events, in which case they won't be cached. The Web Periodic Background Synchronization API can handle cases like this, as well as larger downloads such as movies.
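Catering for missing levels can be as simple as checking which URLs aren't cached yet and retrying only those. You might do that on a later page load, or when the player reaches level 10. The following is a minimal sketch. It assumes a Cache-like object with match() and add(), and the helper name `cacheMissing` is hypothetical.

```javascript
// Re-attempt caching of optional assets (e.g. levels 11-20) that are not cached yet.
// `cache` is assumed to expose Cache-like match(url) and add(url) methods.
async function cacheMissing(cache, urls) {
  const missing = [];
  for (const url of urls) {
    // match() resolves with undefined when there is no cached entry
    if (!(await cache.match(url))) missing.push(url);
  }
  // Try each missing URL independently so one failure doesn't block the others
  const results = await Promise.allSettled(missing.map((url) => cache.add(url)));
  // Report which URLs are still missing so the caller can retry later
  return missing.filter((url, i) => results[i].status === 'rejected');
}
```

In the service worker you might call it as `caches.open('mygame-core-v1').then((cache) => cacheMissing(cache, levelUrls))`, where `levelUrls` is a hypothetical list of the level 11–20 assets.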

On activate

Ideal for: clean-up and migration.

Once a new service worker has installed and a previous version isn't being used, the new one activates, and you get an activate event. Because the previous version is out of the way, it's a good time to handle schema migrations in IndexedDB and also delete unused caches.

```javascript
self.addEventListener('activate', function (event) {
  event.waitUntil(
    caches.keys().then(function (cacheNames) {
      return Promise.all(
        cacheNames
          .filter(function (cacheName) {
            // Return true if you want to remove this cache,
            // but remember that caches are shared across
            // the whole origin
          })
          .map(function (cacheName) {
            return caches.delete(cacheName);
          }),
      );
    }),
  );
});
```

During activation, events such as fetch are put into a queue, so a long activation could block page loads. Keep your activation as lean as possible, and only use it for things you couldn't do while the previous version was active.

On trained-to-thrill I use this to remove old caches.
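The filter() callback above is deliberately left empty. One approach is to delete only caches that carry your own prefix and that the current version no longer uses. This leaves caches created by other code on the same origin alone. The sketch below assumes every cache this site creates starts with `mysite-` and that you maintain a hypothetical `CURRENT_CACHES` list. It also keeps the per-article caches from the "Save for offline" pattern, so an update doesn't throw away content the user chose to keep.

```javascript
// Names of the caches the current service worker version expects (hypothetical values).
const CURRENT_CACHES = ['mysite-static-v3', 'mysite-dynamic'];

// Returns true for caches this site owns that the current version no longer uses.
function shouldDeleteCache(cacheName) {
  // Caches are shared across the whole origin: anything without our prefix isn't ours
  if (!cacheName.startsWith('mysite-')) return false;
  // User-saved articles ("Save for offline") must survive updates
  if (cacheName.startsWith('mysite-article-')) return false;
  // Ours, but not used by this version: safe to delete
  return !CURRENT_CACHES.includes(cacheName);
}
```

In the activate handler, it can be passed straight to `.filter(shouldDeleteCache)`.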

On user interaction

Ideal for: when the whole site can't be taken offline, and you choose to let the user select the content they want available offline. E.g. a video on something like YouTube, an article on Wikipedia, a particular gallery on Flickr.

Give the user a "Read later" or "Save for offline" button. When it's clicked, fetch what you need from the network and pop it in the cache.

```javascript
document.querySelector('.cache-article').addEventListener('click', function (event) {
  event.preventDefault();

  var id = this.dataset.articleId;
  caches.open('mysite-article-' + id).then(function (cache) {
    fetch('/get-article-urls?id=' + id)
      .then(function (response) {
        // /get-article-urls returns a JSON-encoded array of
        // resource URLs that a given article depends on
        return response.json();
      })
      .then(function (urls) {
        cache.addAll(urls);
      });
  });
});
```

The Cache API is available from pages and service workers, which means you can add to the cache directly from the page.
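Each saved article gets its own cache named `'mysite-article-' + id`. That means the page can also list and remove saved articles using only the Cache Storage API. The following is a minimal sketch. The helpers `listSavedArticleIds` and `removeSavedArticle` are hypothetical, and in the page `cacheStorage` would simply be `caches`.

```javascript
const ARTICLE_PREFIX = 'mysite-article-';

// Returns the IDs of articles the user has saved for offline reading.
// `cacheStorage` is expected to behave like window.caches (keys() -> Promise<string[]>).
async function listSavedArticleIds(cacheStorage) {
  const names = await cacheStorage.keys();
  return names
    .filter((name) => name.startsWith(ARTICLE_PREFIX))
    .map((name) => name.slice(ARTICLE_PREFIX.length));
}

// Deletes a saved article's cache; resolves true if it existed.
function removeSavedArticle(cacheStorage, id) {
  return cacheStorage.delete(ARTICLE_PREFIX + id);
}
```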

On network response

Ideal for: frequently updating resources such as a user's inbox, or article contents. Also useful for non-essential content such as avatars, but care is needed.

If a request doesn't match anything in the cache, get it from the network, send it to the page, and add it to the cache at the same time.

If you do this for a range of URLs, such as avatars, be careful not to bloat your origin's storage. If the user needs to reclaim disk space, you don't want to be the prime candidate. Make sure you get rid of items in the cache you don't need anymore.

```javascript
self.addEventListener('fetch', function (event) {
  event.respondWith(
    caches.open('mysite-dynamic').then(function (cache) {
      return cache.match(event.request).then(function (response) {
        return (
          response ||
          fetch(event.request).then(function (response) {
            cache.put(event.request, response.clone());
            return response;
          })
        );
      });
    }),
  );
});
```

To allow for efficient memory usage, you can only read a response's or request's body once. The code sample uses .clone() to create additional copies that can be read separately.

On trained-to-thrill I use this to cache Flickr images.
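For caches that grow with use, such as avatars, one way to get rid of items you no longer need is to cap the number of entries and evict the oldest. The sketch below uses a hypothetical `trimCache` helper and an arbitrary limit. It assumes that cache.keys() lists requests in insertion order. That is the documented behavior, but it's worth verifying for the browsers you target.

```javascript
// Deletes the oldest entries until the cache holds at most `maxItems` entries.
// Assumes `cache` behaves like a Cache: keys() lists requests oldest-first,
// and delete(request) removes a single entry.
async function trimCache(cache, maxItems) {
  const keys = await cache.keys();
  const excess = keys.length - maxItems;
  if (excess <= 0) return 0;
  // Evict the oldest entries first
  await Promise.all(keys.slice(0, excess).map((request) => cache.delete(request)));
  return excess;
}
```

In a fetch handler, you might call it from event.waitUntil() once the put() has resolved, e.g. with a limit of 50 avatars.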

Stale-while-revalidate

Ideal for: frequently updating resources where having the very latest version is non-essential. Avatars can fall into this category.

If there's a cached version available, use it, but fetch an update for next time.

```javascript
self.addEventListener('fetch', function (event) {
  event.respondWith(
    caches.open('mysite-dynamic').then(function (cache) {
      return cache.match(event.request).then(function (response) {
        var fetchPromise = fetch(event.request).then(function (networkResponse) {
          cache.put(event.request, networkResponse.clone());
          return networkResponse;
        });
        return response || fetchPromise;
      });
    }),
  );
});
```

This is very similar to HTTP's stale-while-revalidate.
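In the code above, the service worker may be stopped once it has responded, before the background fetch has finished updating the cache. Passing that work to event.waitUntil() asks the browser to keep the worker alive until the work settles. The sketch below shows that refinement. It pulls the strategy into a hypothetical `staleWhileRevalidate` function so the cache, fetch, and waitUntil can be swapped out for testing.

```javascript
// Stale-while-revalidate with the cache update kept alive via waitUntil().
// `cache` is Cache-like (match, put), `fetchFn` behaves like fetch(), and
// `waitUntil` would be event.waitUntil.bind(event) in a real fetch handler.
function staleWhileRevalidate(request, cache, fetchFn, waitUntil) {
  const networkPromise = fetchFn(request);
  // Clone as soon as the response arrives, before anything reads its body
  const updatePromise = networkPromise.then((response) =>
    cache.put(request, response.clone()),
  );
  // Keep the worker alive until the cache update settles; a failed update is ignored
  waitUntil(updatePromise.catch(() => {}));
  // Serve the cached copy if there is one, otherwise wait for the network
  return cache.match(request).then((cached) => cached || networkPromise);
}
```

In a fetch handler you might call it as `event.respondWith(caches.open('mysite-dynamic').then((cache) => staleWhileRevalidate(event.request, cache, fetch, event.waitUntil.bind(event))))`.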

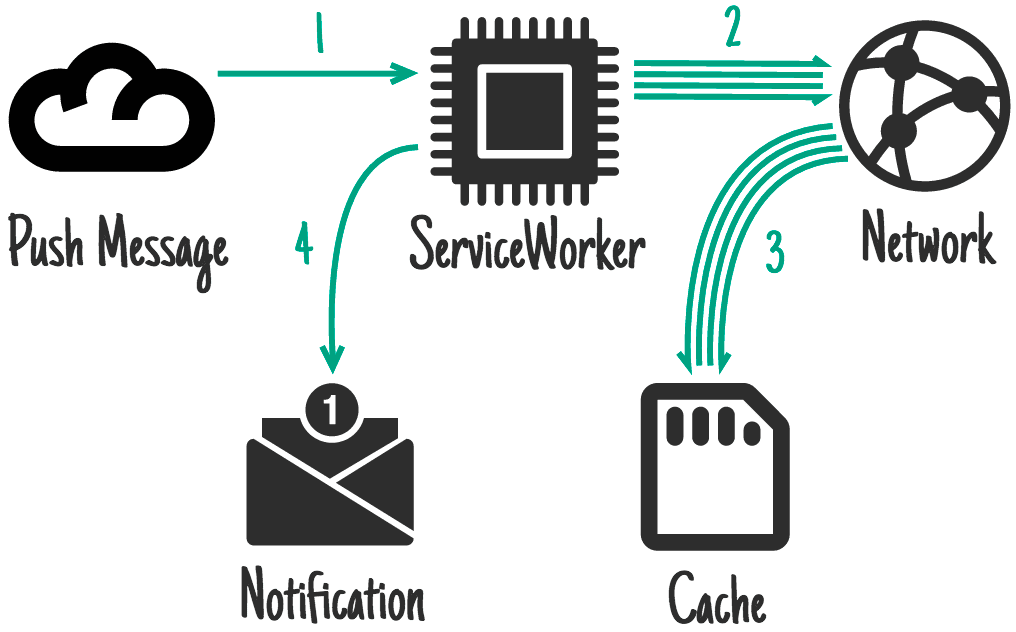

On push message

The Push API is another feature built on top of service workers. It allows the service worker to be woken in response to a message from the OS's messaging service, even when the user doesn't have a tab open to your site. Only the service worker is woken up. You request permission to do this from a page, and the user is prompted.

Ideal for: content relating to a notification, such as a chat message, a breaking news story, or an email. Also infrequently changing content that benefits from immediate sync, such as a to-do list update or a calendar alteration.

The common final outcome is a notification which, when tapped, opens and focuses a relevant page, and for which updating caches beforehand is extremely important. The user is online at the time of receiving the push message, but they may not be when they finally interact with the notification, so it's critical to make this content available offline.

This code updates caches before showing a notification:

```javascript
self.addEventListener('push', function (event) {
  // event.data is null when the push message has no payload
  if (event.data && event.data.text() == 'new-email') {
    event.waitUntil(
      caches
        .open('mysite-dynamic')
        .then(function (cache) {
          return fetch('/inbox.json').then(function (response) {
            cache.put('/inbox.json', response.clone());
            return response.json();
          });
        })
        .then(function (emails) {
          // Return the promise so waitUntil covers showing the notification
          return registration.showNotification('New email', {
            body: 'From ' + emails[0].from.name,
            tag: 'new-email',
          });
        }),
    );
  }
});
```

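```javascript
self.addEventListener('notificationclick', function (event) {
  if (event.notification.tag == 'new-email') {
    // Assume that all of the resources needed to render
    // /inbox/ have previously been cached, e.g. as part
    // of the install handler.
    event.notification.close();
    // WindowClient can't be constructed directly; clients.openWindow() opens the page
    event.waitUntil(clients.openWindow('/inbox/'));
  }
});
```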

On background-sync

Background sync is another feature built on top of service workers. It lets you request background data synchronization as a one-off, or on an (extremely heuristic) interval. This happens even when the user doesn't have a tab open to your site. Only the service worker is woken up. You request permission to do this from a page and the user is prompted.

Ideal for: non-urgent updates, especially those that happen so regularly that a push message per update would be too frequent for users, such as social timelines or news articles.

```javascript
self.addEventListener('sync', function (event) {
  // The name passed to registration.sync.register() is exposed as event.tag
  if (event.tag == 'update-leaderboard') {
    event.waitUntil(
      caches.open('mygame-dynamic').then(function (cache) {
        return cache.add('/leaderboard.json');
      }),
    );
  }
});
```
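The sync event only fires for tags that a page has registered. The sketch below shows the page side of a one-off sync, using the Background Sync API's registration.sync.register(). The helper name is hypothetical. So is the `fallback` function, which updates the data immediately where the API isn't supported; you may prefer different behavior there.

```javascript
// Page-side: ask the service worker to run its 'update-leaderboard' sync handler.
// `registration` is a ServiceWorkerRegistration (e.g. from navigator.serviceWorker.ready).
// `fallback` is a hypothetical function that updates the data directly.
async function requestLeaderboardSync(registration, fallback) {
  if ('sync' in registration) {
    // One-off Background Sync: the browser later fires a 'sync' event with this tag
    await registration.sync.register('update-leaderboard');
    return 'registered';
  }
  // Background Sync isn't supported in this browser: update right away instead
  await fallback();
  return 'fallback';
}
```

For example: `navigator.serviceWorker.ready.then((reg) => requestLeaderboardSync(reg, updateLeaderboardNow))`, where `updateLeaderboardNow` is your own (hypothetical) update function.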

Cache persistence

Your origin is given a certain amount of free space to do what it wants with. That free space is shared between all origin storage: (local) Storage, IndexedDB, File System Access, and of course Caches.

The amount you get isn't spec'd. It differs depending on device and storage conditions. You can find out how much you've got with:

```javascript
// await only works inside an async function (or a module script)
async function logStorageEstimate() {
  if (navigator.storage && navigator.storage.estimate) {
    const quota = await navigator.storage.estimate();
    // quota.usage -> Number of bytes used.
    // quota.quota -> Maximum number of bytes available.
    const percentageUsed = (quota.usage / quota.quota) * 100;
    console.log(`You've used ${percentageUsed}% of the available storage.`);
    const remaining = quota.quota - quota.usage;
    console.log(`You can write up to ${remaining} more bytes.`);
  }
}

logStorageEstimate();
```

However, like all browser storage, the browser is free to throw away your data if the device comes under storage pressure. Unfortunately the browser can't tell the difference between those movies you want to keep at all costs, and the game you don't really care about.

To work around this, use the StorageManager interface:

```javascript
// From a page:
navigator.storage.persist().then(function (persisted) {
  if (persisted) {
    // Hurrah, your data is here to stay!
  } else {
    // So sad, your data may get chucked. Sorry.
  }
});
```

Of course, the user has to grant permission. For this, use the Permissions API.

Making the user part of this flow is important, as we can now expect them to be in control of deletion. If their device comes under storage pressure, and clearing non-essential data doesn't solve it, the user gets to judge which items to keep and remove.

For this to work, it requires operating systems to treat "durable" origins as equivalent to platform-specific apps in their breakdowns of storage usage, rather than reporting the browser as a single item.
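In practice, you might check whether storage is already persistent before asking. navigator.storage.persisted() reports the current state without requesting anything. The following is a sketch. `ensurePersisted` is a hypothetical helper that takes the StorageManager (navigator.storage) as a parameter.

```javascript
// Returns true if the origin's storage is (now) marked persistent.
// `storage` is expected to behave like navigator.storage (a StorageManager).
async function ensurePersisted(storage) {
  if (!storage || !storage.persist) return false; // API not available
  // persisted() only reports the current state; it never prompts
  if (await storage.persisted()) return true;
  // persist() may prompt the user or be decided by browser heuristics
  return storage.persist();
}
```

From a page, you'd call `ensurePersisted(navigator.storage)`.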

Serving suggestions

No matter how much caching you do, the service worker only uses the cache when and how you tell it to. Here are a few patterns for handling requests:

Cache only

Ideal for: anything you'd consider static to a particular "version" of your site. You should have cached these in the install event, so you can depend on them being there.

```javascript
self.addEventListener('fetch', function (event) {
  // If a match isn't found in the cache, the response
  // will look like a connection error
  event.respondWith(caches.match(event.request));
});
```

…although you don't often need to handle this case specifically: "Cache, falling back to network" covers it.

Network only

Ideal for: things that have no offline equivalent, such as analytics pings, non-GET requests.

```javascript
self.addEventListener('fetch', function (event) {
  event.respondWith(fetch(event.request));
  // or don't call event.respondWith, which
  // will result in default browser behavior
});
```

…although you don't often need to handle this case specifically: "Cache, falling back to network" covers it.

Cache, falling back to network

Ideal for: building offline-first. In such cases, this is how you'll handle the majority of requests. Other patterns are exceptions based on the incoming request.

```javascript
self.addEventListener('fetch', function (event) {
  event.respondWith(
    caches.match(event.request).then(function (response) {
      return response || fetch(event.request);
    }),
  );
});
```

This gives you the "cache only" behavior for things in the cache and the "network only" behavior for anything not-cached (which includes all non-GET requests, as they cannot be cached).

Cache and network race

Ideal for: small assets where you're chasing performance on devices with slow disk access.

With some combinations of older hard drives, virus scanners, and faster internet connections, getting resources from the network can be quicker than going to disk. However, going to the network when the user has the content on their device can be a waste of data, so bear that in mind.

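```javascript
// Promise.race rejects as soon as any promise rejects, even if another
// would later fulfill. We want the first *fulfilled* result instead.
// (Modern browsers also provide Promise.any(), which does this job.)
function promiseAny(promises) {
  return new Promise((resolve, reject) => {
    // make sure promises are all promises
    promises = promises.map((p) => Promise.resolve(p));
    // resolve this promise as soon as one resolves
    // (rejections are handled by the reduce below)
    promises.forEach((p) => p.then(resolve, () => {}));
    // reject if all promises reject
    promises.reduce((a, b) => a.catch(() => b)).catch(() => reject(Error('All failed')));
  });
}

self.addEventListener('fetch', function (event) {
  event.respondWith(
    promiseAny([
      // caches.match() resolves with undefined on a miss; reject instead
      // so that a miss can't win the race
      caches.match(event.request).then(function (response) {
        if (!response) throw Error('Not in cache');
        return response;
      }),
      fetch(event.request),
    ]),
  );
});
```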
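Modern JavaScript has Promise.any(), which fulfills with the first promise to fulfill and rejects (with an AggregateError) only when all of them reject. Note that caches.match() fulfills with undefined on a miss rather than rejecting. If you don't turn a miss into a rejection, it can win the race with an empty response. The sketch below uses a hypothetical `raceCacheAndNetwork` name. The lookups are passed in as functions so it can be tested outside a browser.

```javascript
// Races a cache lookup against the network and resolves with whichever
// produces a response first. `matchFn` behaves like caches.match() and
// `fetchFn` like fetch(); both take the request.
function raceCacheAndNetwork(request, matchFn, fetchFn) {
  const fromCache = matchFn(request).then((response) => {
    // A cache miss resolves with undefined; reject so it can't win the race
    if (!response) throw new Error('Not in cache');
    return response;
  });
  // Promise.any() only rejects if both sources fail
  return Promise.any([fromCache, fetchFn(request)]);
}
```

In the service worker: `event.respondWith(raceCacheAndNetwork(event.request, (r) => caches.match(r), fetch))`.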

Network falling back to cache

Ideal for: a quick-fix for resources that update frequently, outside of the "version" of the site. E.g. articles, avatars, social media timelines, and game leaderboards.

This means you give online users the most up-to-date content, but offline users get an older cached version. If the network request succeeds you'll most likely want to update the cache entry.

However, this method has flaws. If the user has an intermittent or slow connection they'll have to wait for the network to fail before they get the perfectly acceptable content already on their device. This can take an extremely long time and is a frustrating user experience. See the next pattern, Cache then network, for a better solution.

```javascript
self.addEventListener('fetch', function (event) {
  event.respondWith(
    fetch(event.request).catch(function () {
      return caches.match(event.request);
    }),
  );
});
```
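The text above suggests updating the cache entry when the network request succeeds, which the snippet doesn't do. The following is a sketch of that variant, under a hypothetical `networkFirst` name, with the cache and fetch passed in so it can be tested. It stores only successful (ok) responses, so an error page doesn't replace a good cached copy.

```javascript
// Network first: use a fresh response when possible and refresh the cache with it;
// fall back to the cached copy if the network request fails.
// `cache` is Cache-like (match, put) and `fetchFn` behaves like fetch().
async function networkFirst(request, cache, fetchFn) {
  let response;
  try {
    response = await fetchFn(request);
  } catch (err) {
    // Network failed (e.g. offline): fall back to the cached copy, if any
    const cached = await cache.match(request);
    if (cached) return cached;
    throw err; // offline and nothing cached: surface the network error
  }
  if (response.ok) {
    // A failed write (e.g. quota exceeded) shouldn't stop us serving the fresh response
    await cache.put(request, response.clone()).catch(() => {});
  }
  return response;
}
```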

Cache then network

Ideal for: content that updates frequently. E.g. articles, social media timelines, and game leaderboards.

This requires the page to make two requests, one to the cache, and one to the network. The idea is to show the cached data first, then update the page when and if the network data arrives.

Sometimes you can just replace the current data when new data arrives (such as a game leaderboard), but that can be disruptive with larger pieces of content. Basically, don't "disappear" something the user may be reading or interacting with.

Twitter adds the new content above the old content and adjusts the scroll position so the user is uninterrupted. This is possible because Twitter retains a mostly-linear order to content. I copied this pattern for trained-to-thrill to get content on screen as fast as possible, while displaying up-to-date content as soon as it arrives.

Code in the page:

```javascript
var networkDataReceived = false;

startSpinner();

// fetch fresh data
var networkUpdate = fetch('/data.json')
  .then(function (response) {
    return response.json();
  })
  .then(function (data) {
    networkDataReceived = true;
    updatePage(data);
  });

// fetch cached data
caches
  .match('/data.json')
  .then(function (response) {
    if (!response) throw Error('No data');
    return response.json();
  })
  .then(function (data) {
    // don't overwrite newer network data
    if (!networkDataReceived) {
      updatePage(data);
    }
  })
  .catch(function () {
    // we didn't get cached data, the network is our last hope:
    return networkUpdate;
  })
  .catch(showErrorMessage)
  .then(stopSpinner);
```

Code in the service worker:

You should always go to the network and update a cache as you go.

```javascript
self.addEventListener('fetch', function (event) {
  event.respondWith(
    caches.open('mysite-dynamic').then(function (cache) {
      return fetch(event.request).then(function (response) {
        cache.put(event.request, response.clone());
        return response;
      });
    }),
  );
});
```

In trained-to-thrill, rather than calling fetch and caches from the page, I used XHR and abused the Accept header to tell the service worker where to get the result from (page code, service worker code).

Generic fallback

If you fail to serve something from the cache or network, provide a generic fallback.

Ideal for: secondary imagery such as avatars, failed POST requests, and an "Unavailable while offline" page.

```javascript
self.addEventListener('fetch', function (event) {
  event.respondWith(
    // Try the cache
    caches
      .match(event.request)
      .then(function (response) {
        // Fall back to network
        return response || fetch(event.request);
      })
      .catch(function () {
        // If both fail, show a generic fallback:
        return caches.match('/offline.html');
        // However, in reality you'd have many different
        // fallbacks, depending on URL and headers.
        // Eg, a fallback silhouette image for avatars.
      }),
  );
});
```

The item you fall back to is likely to be an install dependency.

If your page is posting an email, your service worker may fall back to storing the email in an IndexedDB outbox and respond by telling the page that the send failed but the data was successfully retained.
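In practice, you'd usually pick a fallback based on the request's URL and headers. The following minimal sketch chooses between a page fallback and an image fallback using the request method and Accept header. It assumes `/offline.html` and a hypothetical `/img/avatar-silhouette.svg` were cached at install time. The helper name `fallbackUrlFor` is also hypothetical.

```javascript
// Picks a cached fallback URL for a request that failed from both cache and network.
// '/img/avatar-silhouette.svg' is a hypothetical asset; returns null when no fallback fits.
function fallbackUrlFor(request) {
  // Non-GET requests (e.g. a failed POST) need different handling, such as an outbox
  if (request.method !== 'GET') return null;
  const accept = request.headers.get('Accept') || '';
  // Check HTML first: page navigations often list image types in Accept too
  if (accept.includes('text/html')) return '/offline.html';
  if (accept.includes('image/')) return '/img/avatar-silhouette.svg';
  return null;
}
```

In the catch() handler, something like `const url = fallbackUrlFor(event.request); return url ? caches.match(url) : Response.error();` would then replace the single /offline.html fallback.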

Service worker-side templating

Ideal for: pages that cannot have their server response cached.

It's faster to render pages on the server, but that can mean including state data that may not make sense in a cache, such as sign-in state. If your page is controlled by a service worker, you could choose to request JSON data along with a template and render that instead.

```javascript
importScripts('templating-engine.js');

self.addEventListener('fetch', function (event) {
  var requestURL = new URL(event.request.url);

  event.respondWith(
    Promise.all([
      caches.match('/article-template.html').then(function (response) {
        return response.text();
      }),
      // URL objects expose the path as .pathname
      caches.match(requestURL.pathname + '.json').then(function (response) {
        return response.json();
      }),
    ]).then(function (responses) {
      var template = responses[0];
      var data = responses[1];

      return new Response(renderTemplate(template, data), {
        headers: {
          'Content-Type': 'text/html',
        },
      });
    }),
  );
});
```
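renderTemplate comes from whichever templating engine you import. For illustration only, here's a hypothetical minimal version. It replaces `{{name}}` placeholders with HTML-escaped values from the JSON data. A real engine would also handle loops, conditionals, partials, and so on.

```javascript
// Hypothetical stand-in for a templating engine's render function.
function escapeHtml(value) {
  return String(value)
    .replace(/&/g, '&amp;')
    .replace(/</g, '&lt;')
    .replace(/>/g, '&gt;')
    .replace(/"/g, '&quot;')
    .replace(/'/g, '&#39;');
}

// Replaces {{key}} placeholders with the matching own property of `data`, HTML-escaped.
function renderTemplate(template, data) {
  return template.replace(/\{\{\s*(\w+)\s*\}\}/g, function (match, key) {
    // Unknown keys render as an empty string rather than leaking the placeholder
    return Object.prototype.hasOwnProperty.call(data, key) ? escapeHtml(data[key]) : '';
  });
}
```

Escaping matters here because the data comes from JSON that may contain user-generated content, and the result is served as text/html.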

Put it together

You aren't limited to one of these methods. In fact, you'll likely use many of them depending on request URL. For example, trained-to-thrill uses:

- Cache on install, for the static UI and behavior

- Cache on network response, for the Flickr images and data

- Fetch from cache, falling back to network, for most requests

- Fetch from cache, then network, for the Flickr search results

Just look at the request and decide what to do:

```javascript
self.addEventListener('fetch', function (event) {
  // Parse the URL:
  var requestURL = new URL(event.request.url);

  // Handle requests to a particular host specifically
  if (requestURL.hostname == 'api.example.com') {
    event.respondWith(/* some combination of patterns */);
    return;
  }
  // Routing for local URLs
  if (requestURL.origin == location.origin) {
    // Handle article URLs
    if (/^\/article\//.test(requestURL.pathname)) {
      event.respondWith(/* some other combination of patterns */);
      return;
    }
    if (/\.webp$/.test(requestURL.pathname)) {
      event.respondWith(/* some other combination of patterns */);
      return;
    }
    // The request lives on the event; there is no global `request`
    if (event.request.method == 'POST') {
      event.respondWith(/* some other combination of patterns */);
      return;
    }
    if (/cheese/.test(requestURL.pathname)) {
      event.respondWith(
        new Response('Flagrant cheese error', {
          status: 512,
        }),
      );
      return;
    }
  }

  // A sensible default pattern
  event.respondWith(
    caches.match(event.request).then(function (response) {
      return response || fetch(event.request);
    }),
  );
});
```
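As the number of rules grows, it can be easier to keep them in a table of tests than in a chain of if statements. The sketch below applies that idea. The rules mirror the routing above, and the strategy names are placeholders for whichever combination of patterns you choose. `createRouter` is a hypothetical helper, and in a service worker `siteOrigin` would be self.location.origin.

```javascript
// Table-driven version of the routing above: the first matching rule wins.
// Strategy names are placeholders for "some combination of patterns".
function createRouter(siteOrigin) {
  const isLocal = (url) => url.origin === siteOrigin;
  const rules = [
    { strategy: 'api', test: (url) => url.hostname === 'api.example.com' },
    { strategy: 'article', test: (url) => isLocal(url) && /^\/article\//.test(url.pathname) },
    { strategy: 'webp', test: (url) => isLocal(url) && /\.webp$/.test(url.pathname) },
    { strategy: 'post', test: (url, method) => isLocal(url) && method === 'POST' },
  ];
  // Returns the name of the strategy to use for a request ({ url, method })
  return function route(request) {
    const url = new URL(request.url);
    const rule = rules.find((r) => r.test(url, request.method));
    return rule ? rule.strategy : 'cache-falling-back-to-network';
  };
}
```

The fetch handler would then switch on `route(event.request)` and make the matching event.respondWith() call.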

Further reading

- Service workers and the Cache Storage API

- JavaScript Promises—an Introduction: Guide to promises

Credits

For the lovely icons:

- Code by buzzyrobot

- Calendar by Scott Lewis

- Network by Ben Rizzo

- SD by Thomas Le Bas

- CPU by iconsmind.com

- Trash by trasnik

- Notification by @daosme

- Layout by Mister Pixel

- Cloud by P.J. Onori

And thanks to Jeff Posnick for catching many howling errors before I hit "publish".